How to upgrade your Flask application using async

Flask app implemented with async.

Chances are, you aren’t getting everything out of your Python web server.

Long before Python 3, ChatGPT, and TikTok, poor developers had to write their web servers using *shudder* Python 2 native features. Python 2 had no native async/await syntax, so the standard way to serve requests was synchronous, via the Web Server Gateway Interface (WSGI). The frameworks that arose from this era proliferated and became cornerstones of web development; there might even be a synchronous Python web server showing you this text right now. But things change, and with Python 3's native support for asynchronous programming (asyncio and the async/await keywords) came a new standard: the Asynchronous Server Gateway Interface (ASGI).

There are now new(er) frameworks that implement this standard, like Quart and FastAPI, but many developers still default to their familiar synchronous predecessors. The goal of this article is to demonstrate the effect an asynchronous web server can have on your application's performance.

This article load tests Python web servers with Locust. For more information on load testing with Locust, see my previous article.

Who Should Read This?

Anyone who is building a Python web server (or maintaining an existing one) whose workload is network-bound or IO-bound. Or anyone who simply wants to learn about the synchronous and asynchronous web framework paradigms!

First things first: the synchronous approach

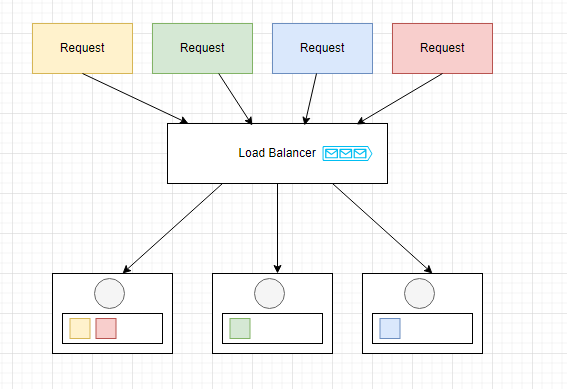

How do synchronous Python web servers even work? The server uses the underlying operating system's threads and processes to handle requests, and in doing so achieves concurrency. Here is a diagram of how you might imagine one:

The web server essentially load-balances incoming requests across workers, which typically run in separate threads. To handle the case where all workers are busy, the web server might keep an internal queue that holds requests in a FIFO-ish order.

In this design, the number of threads inherently limits how much concurrency you can provide. If the work is CPU-bound, this is a fine setup, but if the work is IO-bound or network-bound, limiting your concurrency equates to limiting your maximum throughput: a thread that is waiting on the network cannot serve anyone else. Allow me to demonstrate with a simple example.
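To make the thread-per-request model concrete, here is a small, stdlib-only simulation. It is a sketch under simplifying assumptions, not how waitress is actually implemented: `run_sync_server` is a hypothetical helper, a `queue.Queue` plays the role of the server's internal FIFO queue, and `time.sleep` stands in for a blocking network call.

```python
import queue
import threading
import time


def run_sync_server(num_workers: int, request_latencies: list[float]) -> float:
    """Drain a FIFO queue of simulated requests with a fixed pool of worker threads.

    Hypothetical helper: returns the wall-clock seconds needed to serve every request.
    """
    pending: queue.Queue = queue.Queue()
    for latency in request_latencies:
        pending.put(latency)  # requests wait here until a worker is free

    def worker() -> None:
        while True:
            try:
                latency = pending.get_nowait()
            except queue.Empty:
                return  # nothing left to serve
            time.sleep(latency)  # blocking "network call": this thread can do nothing else

    start = time.perf_counter()
    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return time.perf_counter() - start


# 40 requests that each block for 50 ms (2 s of waiting in total)
requests = [0.05] * 40
print(f"4 workers:  {run_sync_server(4, requests):.2f} s")   # 2 s of waiting split across 4 threads
print(f"16 workers: {run_sync_server(16, requests):.2f} s")  # the same waiting split across 16 threads
```

Adding workers shortens the run only because more threads can sit idle in `time.sleep` at the same time; the throughput ceiling moves with the thread count.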

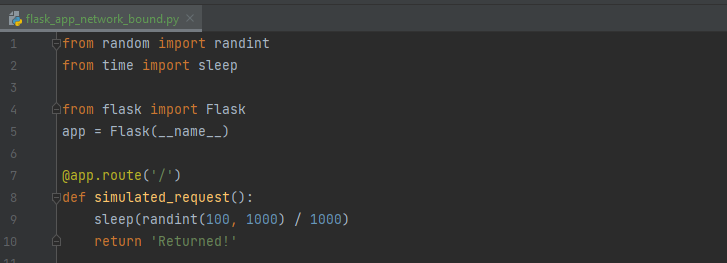

Let’s first create a simple synchronous web server that simulates a network/IO-bound workload. We’ll use the Flask framework, served with waitress.

Our application will respond in anywhere from 100 to 1000 milliseconds, a fairly realistic network-bound scenario.
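The original code lives in the GitHub repo. As a stand-in, here is a minimal sketch of what such an app could look like, assuming a single `/` route and `time.sleep` to simulate the network call; the module name matches the `waitress-serve` command used to start it.

```python
# flask_app_network_bound.py (hypothetical reconstruction, not the repo's exact code)
import random
import time

from flask import Flask

app = Flask(__name__)


@app.route("/")
def index() -> str:
    # Simulate a network call that takes 100-1000 ms.
    # The worker thread is blocked for the entire wait.
    delay_ms = random.randint(100, 1000)
    time.sleep(delay_ms / 1000)
    return f"Responded after {delay_ms} ms"
```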

Simply run “waitress-serve --port=8000 flask_app_network_bound:app” to start the server.

Now, let's ramp up the load using Locust. See my previous article for some more details on getting up and running with Python load tests.

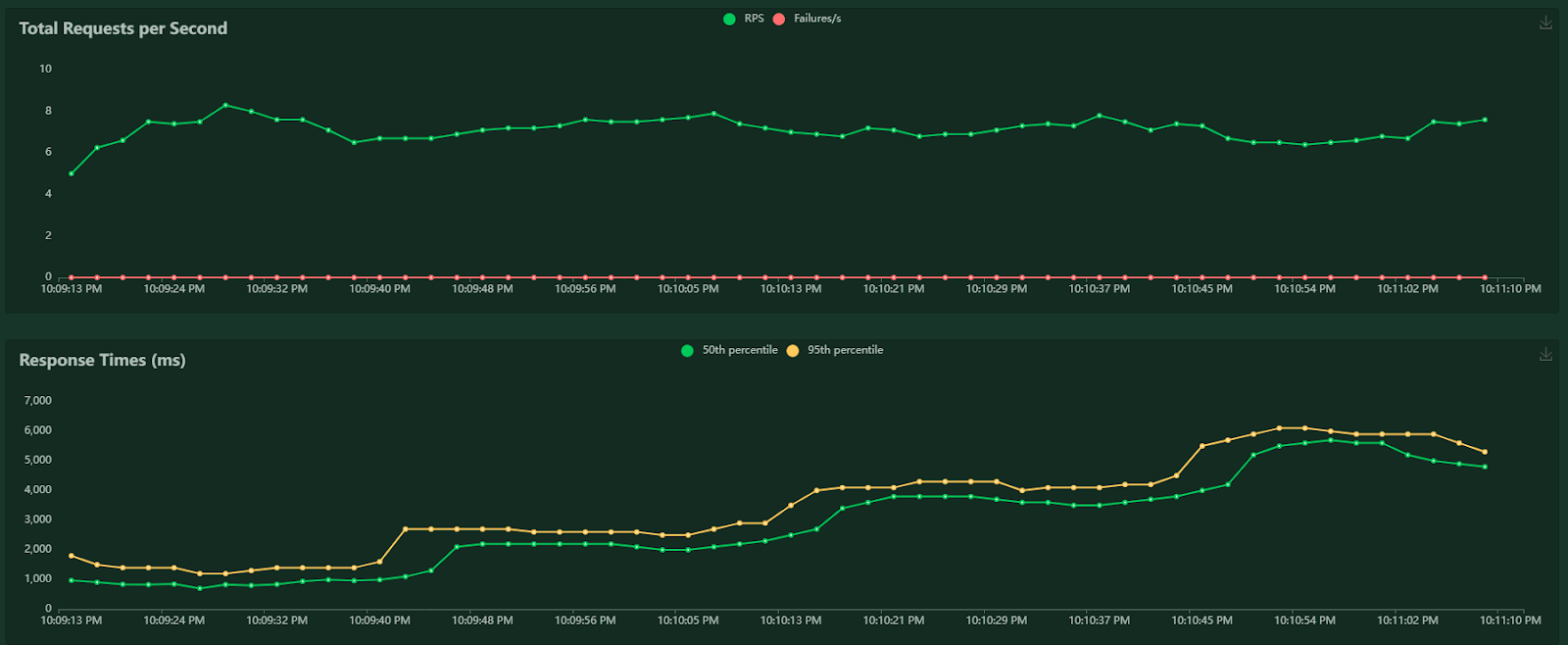

For now, we will step up the load by around 10 TPS (transactions per second) every 30 seconds.

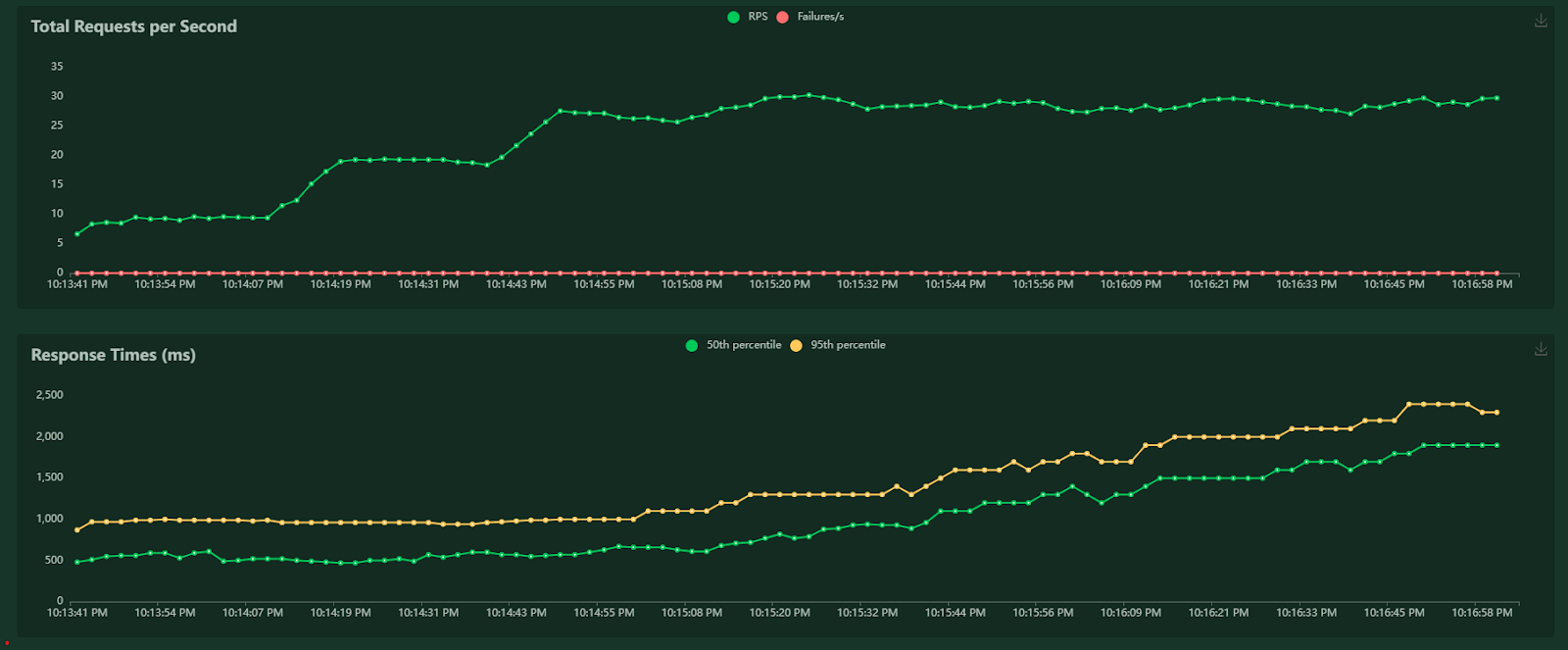

With the default server configuration (4 threads), we can only get around 7 TPS, and latency increases linearly as more and more requests wait in the queue for an available worker.

With 16 threads (“waitress-serve --port=8000 --threads=16 flask_app_network_bound:app”), we can stave off the inevitable up to a predictable ~30 TPS.
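Those numbers are predictable because of Little's law: a system's throughput equals its concurrency divided by the average time each request spends in it. Here is a back-of-the-envelope check, assuming the 100 to 1000 ms delay is uniformly distributed (mean 550 ms) and that server overhead is negligible; `max_throughput` is just an illustrative helper.

```python
def max_throughput(concurrent_workers: int, avg_latency_s: float) -> float:
    """Little's law rearranged: throughput = concurrency / average latency."""
    return concurrent_workers / avg_latency_s


# Mean of a uniform 100-1000 ms delay
avg_latency_s = (0.100 + 1.000) / 2  # 0.55 s

print(f"{max_throughput(4, avg_latency_s):.1f} TPS")   # 7.3 TPS
print(f"{max_throughput(16, avg_latency_s):.1f} TPS")  # 29.1 TPS
```

Both estimates line up with the measured ~7 and ~30 TPS: each thread can finish at most about 1.8 requests per second, however idle the CPU is.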

Alright, so we can give the server more and more threads and get more throughput. That’s expected, but since our application is network-bound, we really don’t need to keep a thread busy while we wait for the network. There must be a better way.

Enter Async.

Asynchronous Web Servers

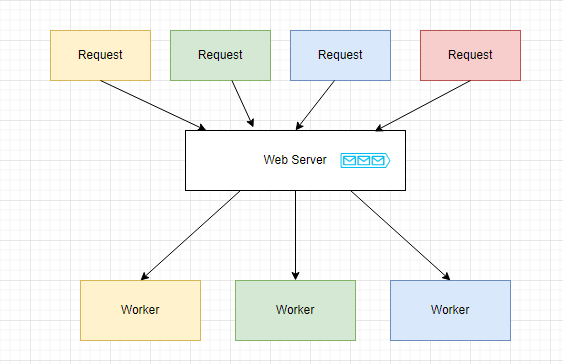

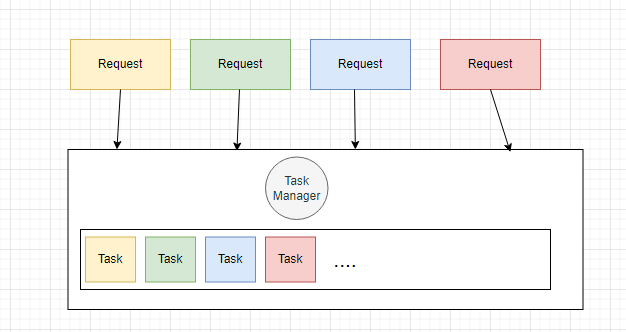

Asynchronous web servers are fundamentally different in how they handle requests and concurrency. They run a main process, essentially a task manager (an event loop), that schedules requests to be executed. The key distinction is that a task returns control to the task manager whenever it needs to wait on asynchronous work, such as an expensive network call. Because the task manager can schedule other tasks as soon as one yields control, it can achieve very high levels of concurrency: hundreds or even thousands of concurrent tasks may exist at one time. This architecture might look something like this:
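The standard library's `asyncio` event loop is this kind of task manager. Here is a minimal sketch, independent of any web framework: `handle_request` and `serve` are hypothetical names, and `asyncio.sleep` plays the role of the network call. A thousand simulated requests run concurrently on a single thread.

```python
import asyncio
import random
import time


async def handle_request(request_id: int) -> int:
    # Hypothetical handler: "await" hands control back to the event loop
    # while the simulated 100-1000 ms network call is pending.
    await asyncio.sleep(random.uniform(0.1, 1.0))
    return request_id


async def serve(num_requests: int) -> list[int]:
    # Schedule every request as a task; all of them wait concurrently.
    return await asyncio.gather(*(handle_request(i) for i in range(num_requests)))


start = time.perf_counter()
results = asyncio.run(serve(1000))
elapsed = time.perf_counter() - start
# Total time tracks the slowest single request, not the ~550 s sum of all delays.
print(f"Served {len(results)} requests in {elapsed:.2f} s")
```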

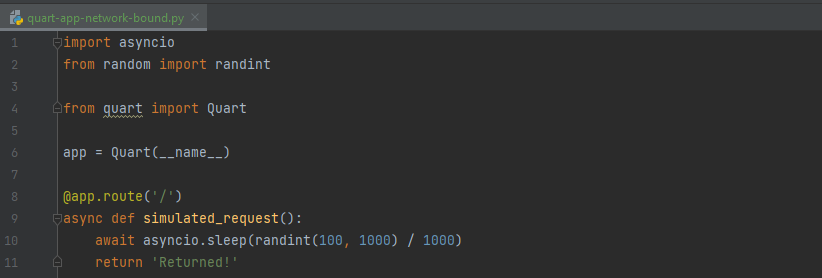

So how do we implement this in practice? Quart is a fast asynchronous Python web framework and a good starting point, with semantics purposefully similar to Flask's. Here is our simple synchronous application from before, reimplemented in Quart:

Notice how our request handler is now declared “async” and our network-bound call is prefixed with “await”: these keywords let our asynchronous server do the magic I explained before. For more details on async in Python, take a look at the asyncio docs.

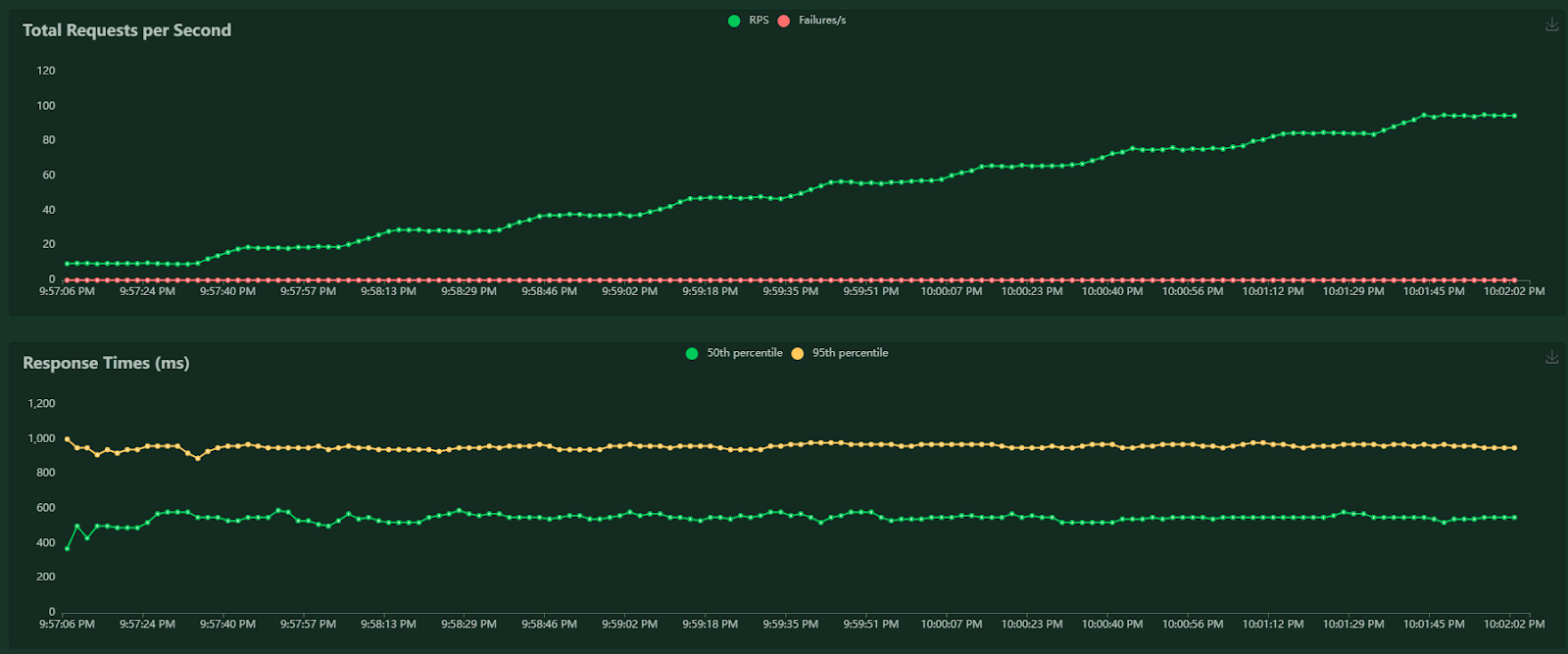

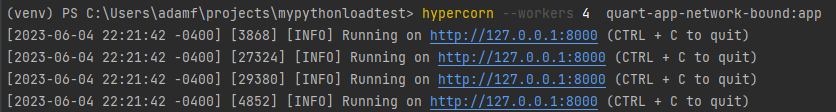

Let’s run the same test as before, serving the app with a default hypercorn configuration (“hypercorn quart-app-network-bound:app”):

We get up to 100 TPS without breaking a sweat. This is a testament to just how effective async servers can be with heavily network/IO bound workloads.

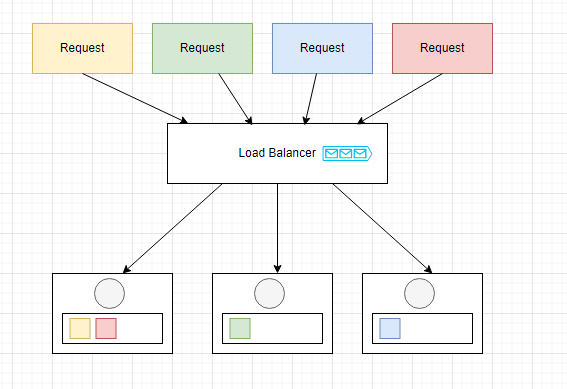

It gets better, though. Remember how I mentioned that the task manager runs in a single process? We can take advantage of multiple CPU cores by spawning multiple parallel worker processes, for example with “hypercorn --workers 4 quart-app-network-bound:app”.

We now have 4 separate process IDs, and our architecture diagram looks something like this:

We are combining the best of both worlds: multiple processes, each able to use all of the async techniques we discussed previously.
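Hypercorn manages its worker processes for you, so there is nothing to write here in practice. To illustrate the idea, though, here is a stdlib-only sketch of the same architecture: several processes, each running its own `asyncio` event loop over its share of simulated requests. `run_workers`, `worker_main`, and `event_loop_worker` are hypothetical names, and this is not how hypercorn is implemented internally.

```python
import asyncio
import multiprocessing as mp
import os


async def event_loop_worker(num_requests: int) -> None:
    # Inside each process, requests interleave on a single event loop.
    await asyncio.gather(*(asyncio.sleep(0.1) for _ in range(num_requests)))


def worker_main(num_requests: int, results) -> None:
    # Each process starts its own event loop and reports its PID when done.
    asyncio.run(event_loop_worker(num_requests))
    results.put((os.getpid(), num_requests))


def run_workers(num_workers: int, requests_per_worker: int) -> list[tuple[int, int]]:
    results = mp.Queue()
    procs = [
        mp.Process(target=worker_main, args=(requests_per_worker, results))
        for _ in range(num_workers)
    ]
    for proc in procs:
        proc.start()
    # Collect results before joining so no child blocks on a full queue.
    collected = [results.get() for _ in procs]
    for proc in procs:
        proc.join()
    return collected


if __name__ == "__main__":  # needed with the "spawn" and "forkserver" start methods
    for pid, served in run_workers(num_workers=4, requests_per_worker=250):
        print(f"process {pid} served {served} requests")
```

Each process waits through its 250 simulated requests concurrently, and the output lists four different PIDs, mirroring the four hypercorn workers.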

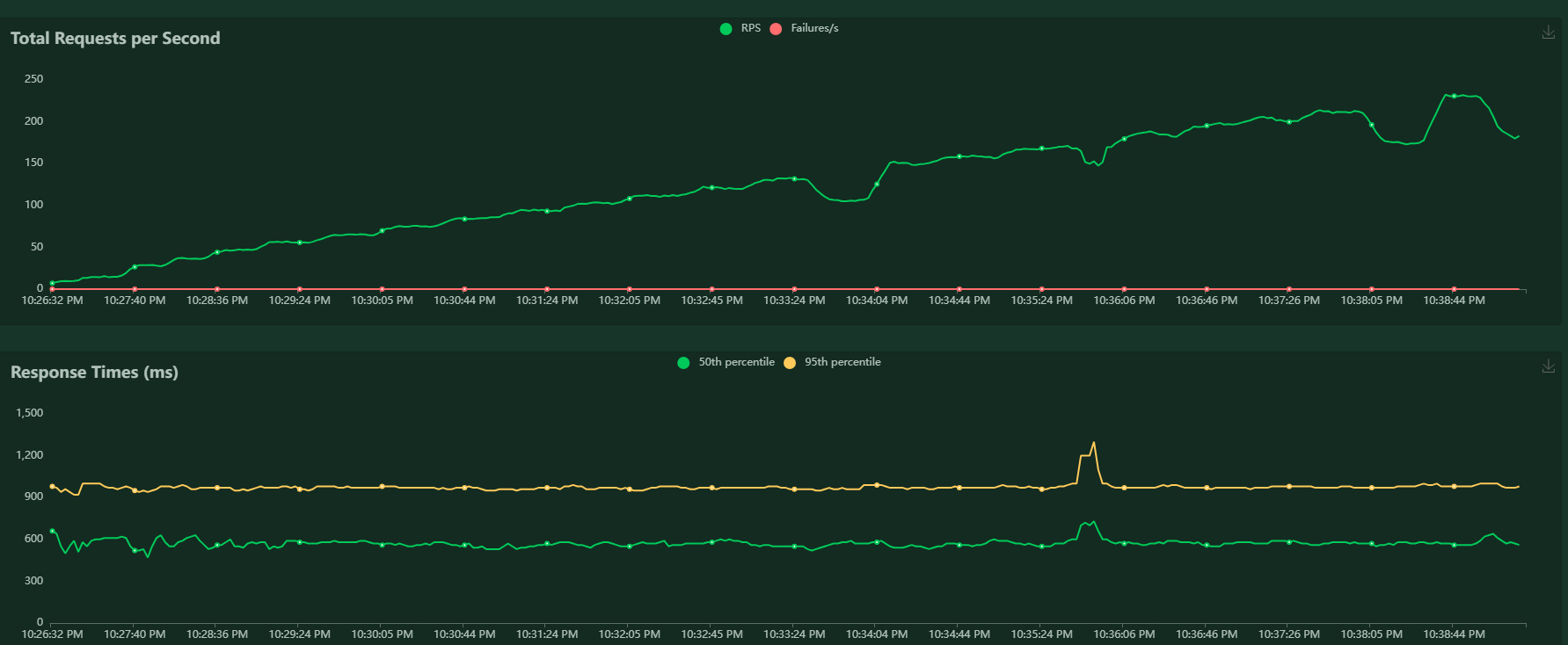

All that’s left is to take this for a spin with another load test:

A leisurely 250 TPS without any smoke from our web server (just a couple of latency spikes, probably when my system loaded a web page or something).

Conclusion

In this article, we explored synchronous and asynchronous Python web servers and showed that for heavily IO/network-bound workloads, the (relatively) newer ASGI-compliant web servers can be far more performant than WSGI servers. When building your next application, strongly consider your application's workload profile and whether it may benefit from async.

To see the code used in this article, visit the GitHub repo.

References

- https://docs.python.org/3/library/asyncio.html

- https://hackernoon.com/3x-faster-than-flask-8e89bfbe8e4f

- https://medium.com/super/how-we-optimized-service-performance-using-the-python-quart-asgi-framework-and-reduced-costs-by-1362dc365a0

- https://pgjones.dev/blog/fastapi-flask-quart-2022/

- https://github.com/pallets/quart

- https://pgjones.gitlab.io/hypercorn/tutorials/installation.html

- https://pgjones.gitlab.io/quart/tutorials/asyncio.html

- https://www.infoworld.com/article/3658336/asgi-explained-the-future-of-python-web-development.html